How energy intensive are AI-generated images?

TLDR: AI images are energy hogs.

Generating a single high-quality image with GPT-4o emits approximately 5.6g of CO₂e, and the viral action figure trend cost the equivalent of a year of electricity for 150 U.S. homes.

GPT-4o’s image generation uses up to 30x more energy than text.

Image quality, model choice, and how you prompt all matter. A lot. Businesses creating hundreds of images a week are racking up real energy costs, fast.

There’s a lot to unpack. If you’re curious, check out our full paper on image generation and carbon intensity here.

Let’s dig in.

Like millions of others, you've probably seen (or tried) GPT-4o’s new image generation feature. It became so popular it overheated OpenAI’s GPUs, forcing the company to throttle access.

Recently, I wrote about how AI-generated text relies on tokens: chunks of language such as words or pieces of words. The longer your prompt or response, the more energy the model consumes.

But when it comes to images, things get even more compute-heavy.

According to our own data at Scope3, a single high-quality GPT-4o action figure costs 5.6g of CO₂e. So, if 1 in 5 active US LinkedIn users iterated with GPT-4o to generate their ideal action figure, Scope3 estimates the trend’s total carbon cost at 716.8 metric tons of CO₂e. That’s the same as 150 homes’ electricity use for one year, all in less than a month.

It’s no surprise that AI image generation has a much higher carbon cost than text-based AI chat: GPT-4o uses up to 30x more energy to create an image than to handle a text-based interaction.
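To get a feel for the scale behind those figures, here is a back-of-the-envelope sketch. It is not Scope3's methodology: it simply assumes every generation in the trend was a high-quality image at 5.6g of CO₂e, and the variable names are ours, chosen for illustration.

```python
# Back out how many generations the trend estimate implies.
# Assumption (ours): every generation is a high-quality image at 5.6 g CO2e.
G_PER_IMAGE = 5.6       # g CO2e per high-quality image (figure from the article)
TREND_TONNES = 716.8    # metric tons CO2e for the whole trend (from the article)
HOMES = 150             # homes' annual electricity use (from the article)

G_PER_TONNE = 1_000_000  # grams in one metric ton

implied_images = TREND_TONNES * G_PER_TONNE / G_PER_IMAGE
tonnes_per_home = TREND_TONNES / HOMES

print(f"Implied generations: {implied_images:,.0f}")
print(f"Implied CO2e per home-year: {tonnes_per_home:.2f} t")
```

Under that assumption, the estimate corresponds to roughly 128 million generations, and to about 4.8 metric tons of CO₂e per home per year.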

Others have estimated that creating a single high-resolution AI image can use as much energy as charging your smartphone several times.

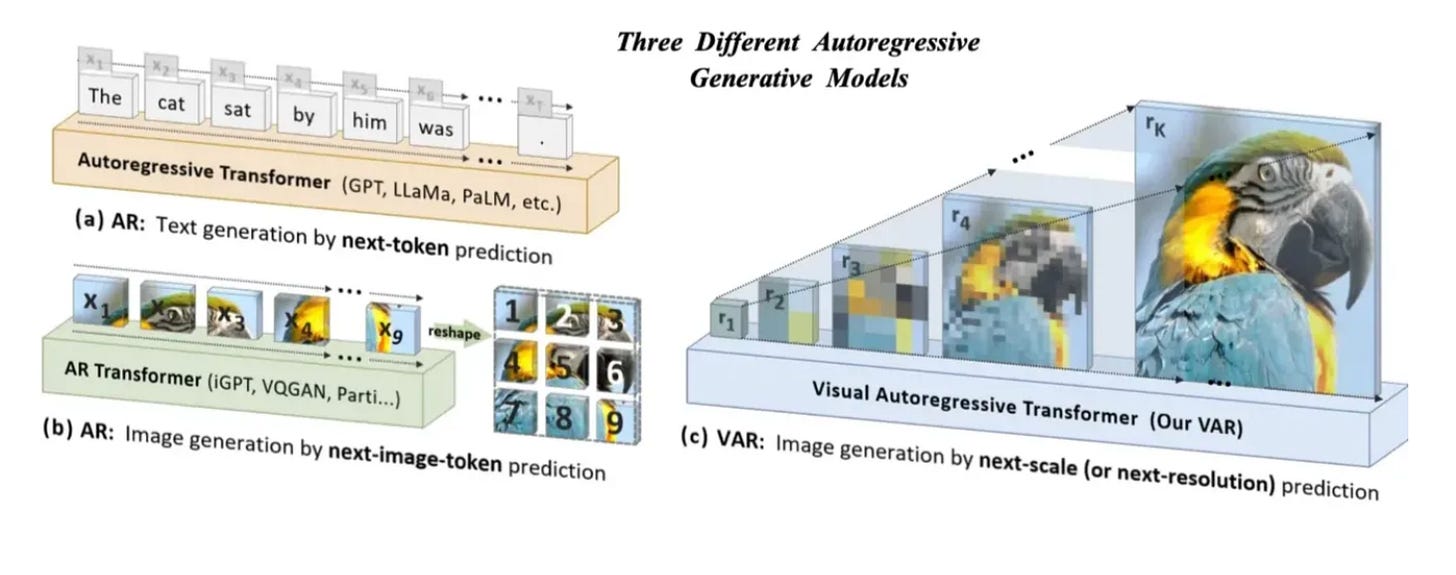

Most popular AI image tools, like DALL·E and Stable Diffusion, create images by starting with random noise and gradually clearing it up, kind of like a photo slowly coming into focus. This process is called diffusion and takes a lot of complex math. GPT-4o works differently. It builds images piece by piece, like putting together a puzzle where each piece depends on the last. This method is more like how text is generated, one word at a time.

GPT-4o lets users choose low, medium, or high quality. That choice dramatically changes the carbon cost, in large part because higher quality settings make the model produce more visual tokens. High-quality images emit:

275% more than medium

1300% more than low
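Those percentages can be turned into rough per-image figures. The sketch below assumes the 5.6g figure applies to the high-quality tier and reads "X% more than" as "high = baseline × (1 + X/100)". The helper name is hypothetical, and the outputs are derived estimates, not measured values.

```python
# Derive implied medium- and low-quality footprints from the high-quality one.
# Assumption (ours): "275% more than medium" means high = 3.75 x medium,
# and "1300% more than low" means high = 14 x low.
HIGH_G = 5.6  # g CO2e per high-quality image (figure from the article)


def baseline_from_percent_more(high: float, percent_more: float) -> float:
    """Return the baseline value when `high` is `percent_more` percent above it."""
    return high / (1 + percent_more / 100)


medium_g = baseline_from_percent_more(HIGH_G, 275)
low_g = baseline_from_percent_more(HIGH_G, 1300)

print(f"medium: {medium_g:.2f} g CO2e per image")
print(f"low:    {low_g:.2f} g CO2e per image")
```

On that reading, a medium-quality image comes out at about 1.5g of CO₂e and a low-quality image at about 0.4g.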

What does this mean for businesses?

For businesses integrating generative AI, these energy disparities translate to significant operational considerations. Imagine a marketing team generating 100 AI visuals a week. That’s like adding a dozen extra laptops to your office power bill, just for images.
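As a rough illustration of how that adds up over a year, here is a minimal sketch. It assumes every image is generated at high quality (5.6g of CO₂e each) with no retries or iterations, which likely understates real usage. The function name and defaults are ours.

```python
# Rough annual footprint for a team generating a fixed number of images weekly.
# Assumptions (ours): every image costs the same, and there are no retries.
WEEKS_PER_YEAR = 52


def annual_kg(images_per_week: int, g_per_image: float = 5.6) -> float:
    """Return the estimated annual emissions in kg CO2e."""
    return images_per_week * WEEKS_PER_YEAR * g_per_image / 1000


print(f"{annual_kg(100):.2f} kg CO2e per year")
```

For 100 high-quality images a week, this works out to about 29 kg of CO₂e a year. Passing a lower `g_per_image` shows how much a smaller quality setting would save.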

Of course, integrating AI could also help streamline operations and avoid other environmental costs (e.g., reducing the need for business flights, in-person photoshoots, and physical production of marketing materials). We are at the beginning of embedding AI into crucial work streams.

That makes this the perfect moment to experiment with intention and design smarter defaults before waste becomes the norm. Here is a framework to help guide your thinking as we all explore the potential of this technology.